As anyone who takes a look around the interweb can tell you though, there is a pretty insidious type of data visualization that's been flooding our society.

Oh yes, I'm talking about the infographic.

While sometimes these are endearing and amusing, they are often terrible, misleading and ridiculous. I was going to formulate some thoughts on why they were terrible, and then I found out that Megan McArdle already had in a column for The Atlantic. It's a pretty good read with lots of pictures. Her summation at the end pretty much says it all:

If you look at these lovely, lying infographics, you will notice that they tend to have a few things in common:

- They are made by random sites without particularly obvious connection to the subject matter. Why is Creditloan.com making an infographic about the hourly workweek?

- Those sites, when examined, either have virtually no content at all, or are for things like debt consolidation--industries with low reputation where brand recognition, if it exists at all, is probably mostly negative.

- The sources for the data, if they are provided at all, tend to be in very small type at the bottom of the graphic, and instead of easy-to-type names of reports, they provide hard-to-type URLs which basically defeat all but the most determined checkers.

- The infographics tend to suggest that SOMETHING TERRIBLE IS HAPPENING IN THE US RIGHT NOW!!! the better to trigger your panic button and get you to spread the bad news BEFORE IT'S TOO LATE!

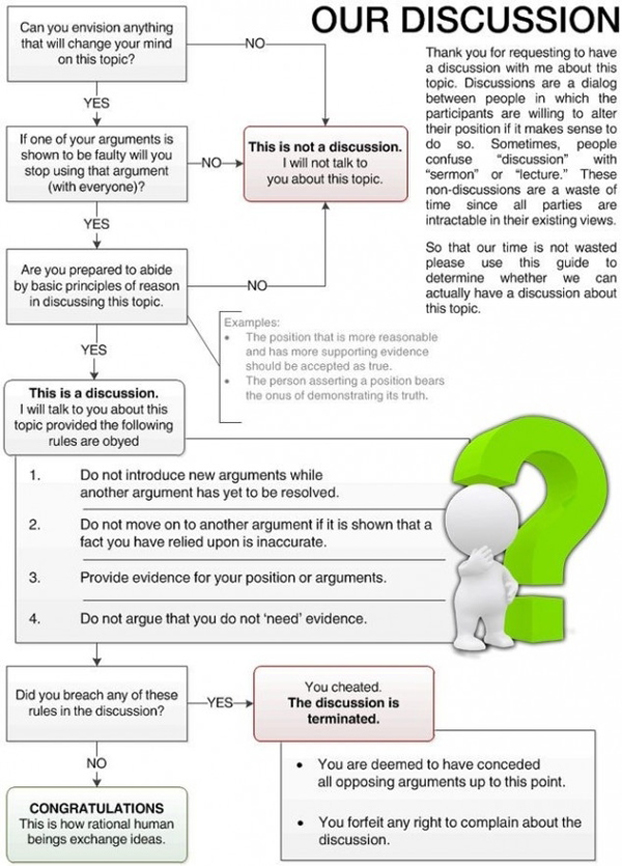

If that's too many words for you though, she also includes this graphic:

So while the infographic can be quite useful when tamed and sedated, if you meet one in the wild, be very, very careful. Do not approach directly, do not look it in the eye.

Friends don't let friends use lousy infographics (I'm looking at you, Facebook).
